Not a development blog for a game similar in concept to Dwarf Fortress. New yet unnamed game info incoming. And about dwarves.

Saturday, March 31, 2012

Monday, March 19, 2012

Filler - Splat

Yup, still totally stuck!

In an attempt to get a few things done I will try to regroup, focus on a few things, simplify and cut corners everywhere and reuse existing code and features from whatever resources I can find.

Terrain is very dependent on texturing, not just because of how it will end up looking, but because the kind of terrain you have determines what you can do with texturing, thus forming a vicious circle.

And the tile-based texture atlas terrain does not mix well with what I want to do with the terrain. Plus it is ugly.

To make up for lost time and to make implementing terrain easier, I am going with a much more streamlined vertex structure: as easy as it gets, with the minimum possible vertices and an index list. This is in contrast to the more complex terrain I had implemented. Stretching a single texture (or multiple large ones) over such a simple mesh will end up looking bad any time you view it from even normal distances. Only when viewing it from far away will it not look blurry.

So I learned texture splatting:

The idea is to take the stretched out blurry texture and splat on top of it a few other highly tiled textures. This is done on the fly by a pixel shader. The only inputs this shader takes are a special bitmap that says where and how much to splat, and the textures that are going to be blended together. In the above video both the heightmap and the splatter-alpha map are only 256x256, while the textures that are used for detail are higher resolution.
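As a rough illustration of the blend described above, here is a minimal Python sketch of what happens for a single pixel. It is not the actual shader: it assumes the splat map's red, green and blue channels act as weights for three detail textures, and the weight normalisation and the `tile_uv` and `splat` names are illustrative choices.

```python
def tile_uv(u, v, repeat):
    """Map terrain UV in [0, 1] to detail-texture UV, repeated `repeat` times."""
    return (u * repeat) % 1.0, (v * repeat) % 1.0


def splat(weights, details):
    """Blend three detail colours by the splat-map weights for one pixel.

    weights: (r, g, b) sample of the low-resolution splat map, each in [0, 1]
    details: three (r, g, b) samples, one per highly tiled detail texture
    """
    total = sum(weights)
    if total == 0.0:
        return (0.0, 0.0, 0.0)  # nothing painted at this spot
    # Assumption: normalise so the weights sum to 1 and brightness stays stable.
    return tuple(
        sum(w * d[c] for w, d in zip(weights, details)) / total
        for c in range(3)
    )
```

The splat map is sampled with the stretched terrain UV, while each detail texture is sampled with the tiled UV, which is why a 256x256 map can still drive sharp-looking detail up close.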

In theory this is a simple process and I have fully understood the shader. In practice things are far from smooth. Irrlicht has a 4 texture limit. I think you can get it to support 8 somehow, probably by recompiling. So one texture is the alpha map, and three textures are used for detail. There is no room for the master stretched out terrain texture, so the above video uses only the detail textures without a master texture. Great, more obstacles!

Also, because I am using a custom shader, built in lighting does not work, so I'll have to merge my terrain splatting shader with my lighting shader.

In the spirit of code reuse, I have used the built-in terrain class from Irrlicht, rather than using my own simple vertex setup and implementing terrain LOD switching myself. While not really visible in the video, the terrain switches dynamically in complexity based on distance. And it is a good thing that you can't notice that it does this. I made a slight modification to that class's behavior so that it uses a shader instead of applying the default detail mapping.

I'll use that class as a learning tool, maybe even adopt parts of it into my code.

Friday, March 9, 2012

Screens of the day 24 - One light to rule them all

I must confess I am kind of stuck on the caves. There are at least half a dozen ways to do it and none of them is free of disadvantages or easy to implement. Caves are the hardest task I have encountered up to this moment. Path-finding is hard, but I am not at the stage where it becomes tricky because I still limit it to 2D. Lighting is hard, but this comes down mostly to my inexperience with it. The real technical challenge is coding around the hardware limits on the number of lights. This will probably be the biggest issue that I will ever encounter, but for now that spot is reserved for caves.

I also read a lot about the marching cubes/tetrahedrons method. It is a very interesting method that mostly comes up in research projects and in projects that look extremely promising but have since been abandoned. It is also almost always either poorly documented or described in far too scientific a language. Let's face it: with the wealth of information out there, if you are having serious and lasting problems with midpoint-displacement or Perlin noise terrain, then one probable cause could be that you are not cut out for programming (or are just a beginner). But not so for marching cubes. While the basics are fairly simple, solving the very common ambiguity problem and making seamless LOD switching is hard as ******** *** ******* ****.

So while I am not done yet with caves, terrain has been improved. Selection works again, there is a single cell focus "cursor" and mouse movement now tracks the top of the surface, whatever that is, making it a lot easier to navigate and select stuff.

Until I manage to do something meaningful with the caves, I need to write about something, so it's filler time again. This time: shaders!

I get the impression that Irrlicht's support for shaders is not the best in the world. Here are some observations:

- Still can't get global variables to take on the value they are initialized with. I have to set these values manually from C++ code. Not a big issue, but I see code out there that does not need to do this. Today we live in a pretty XNA-dominated era: XNA is the C#, game-centered library for Windows and Xbox. From what I saw, I really like XNA. It is like DirectX on crack (the positive interpretation of that statement; not the addicted to drugs one)! You can find a ton of XNA resources out there and a lot of what I have learned about shaders comes from there.

- There is no support for the "technique" section in the shader sources. This is quite problematic because you can't just use a shader file to create post processing effects, deferred rendering and other composition techniques.

- You set shader variables (actually they are called constants) on a shader type basis; that is, you need to specify the name and know whether the variable belongs to the vertex shader or the pixel shader. Setting a pixel shader variable as a vertex shader one (and vice-versa) generates an error and wrong rendering. But what if the variable is shared between the two shaders? Experimentally I found that I need to set it for both for it to render correctly. Again, there is little to no indication in Internet samples about having to do this.

These caveats notwithstanding, I managed to get per-pixel Blinn-Phong lighting working, but only with one light:

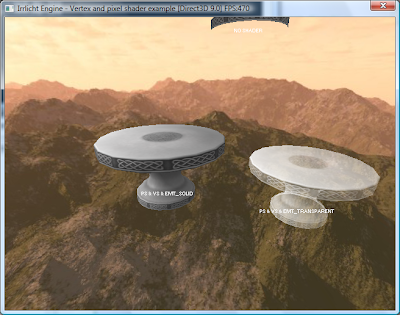

Above you can see a sample. Bottom left is a shaded table. Bottom right is a shaded table with transparency. Flying higher you have a non-shaded non-lit model. You can't see it here very well, so let's take a look at the same scene from another view-point:

It is not really fair to compare a non-shaded non-lit model to a shaded one, but you saw how these models look with fixed pipeline lighting in the past, using either a two- or three-light setup. BrewStew suggested making the table brighter for outside lighting conditions, and this is as bright as it gets:

Now this is per-pixel lighting, meaning that every single visible pixel should be affected by lighting. In the future, front-to-back ordering for rendering should be implemented to speed up the rendering. Light can be directional, point or spotlight shaped, and have ambient, diffuse, specular and emissive components (emissive is ignored for now because I don't need it and there is no use slowing down rendering even more). Materials also have diffuse, specular and ambient reaction values, together with a global ambient color. So the lighting model should be compatible with both the fixed pipeline lighting system of the GPU and things you can get in a 3D modeling program. I tested the values a lot. Global ambient is a little bit weird and so is specular. Specular not only creates those very shiny highlights that everybody wants from specularity, but also globally shades the object based on the direction of lighting. This means that you can't just put shininess to zero if you want no highlights; you also need to set the material's specular parameter to zero, or risk having the object rendered incorrectly. Playing around with the parameters I managed to get this very ugly and hard pseudo-self-shadowing to render:

Very ugly, but it is free, as in it does not take more time to render like this.
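For reference, the per-pixel lighting described above can be sketched for a single directional light in a few lines of Python. This is the textbook Blinn-Phong formula reduced to one scalar intensity, not the shader used in the screenshots; the function and parameter names are illustrative assumptions.

```python
import math


def normalize(v):
    """Return v scaled to unit length (a zero vector is returned unchanged)."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0.0 else tuple(v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def blinn_phong(normal, to_light, to_eye, ambient, diffuse, specular, shininess):
    """Scalar light intensity at one pixel for one directional light."""
    n = normalize(normal)
    l = normalize(to_light)
    e = normalize(to_eye)
    h = normalize(tuple(a + b for a, b in zip(l, e)))  # half vector
    n_dot_l = max(dot(n, l), 0.0)
    n_dot_h = max(dot(n, h), 0.0)
    # The specular term only applies where the surface faces the light.
    spec = specular * (n_dot_h ** shininess) if n_dot_l > 0.0 else 0.0
    return ambient + diffuse * n_dot_l + spec
```

Under this formula, a shininess of zero turns the specular term into a constant, because anything raised to the power of zero is 1. That is one plausible explanation for specular "globally shading" the object unless the material's specular value is also zero, assuming the shader uses the usual power function.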

The problem is that this is a one-light setup. Having objects textured helps reduce the problems with such a setup and some shapes are better suited than others. But take a look at this untextured column to see the problems with a one-light setup:

The side that faces the light has shading, but the shadowy areas are completely "flat".

I played around a lot with the parameters and got a lot of results, like this one:

Except for the one light limit there is one more problem: vertex colors are too washed out. Let's try and use a pure red vertex coloring and see how it looks:

The unshaded object is very open about its coloring, blasting away with its very explicit red coloring. The shaded object only takes a hint about its reddishness, becoming slightly pink. You can improve this by lowering the ambient material parameters:

The problem is that this makes the unlit areas of the object too dark:

A solution is to modify the color of the light and make it red:

Now you can play around with brightness:

This approach has one serious disadvantage: performance! This engine has been about one thing from day one, and one thing only: a huge number of objects in the scene with smooth performance. My engine does not care about coloring: it is free. You can have all objects in the scene have a single color or all a random color (from a limited pool of available colors). Even if you distribute colors with the worst possible mathematical probability to maximize color change, the engine doesn't care. Now, if I were to shine a different light on each object to color it using a pixel shader, the rendering performance would become dependent on the color distribution. Having just a few colors with large chunks colored the same would have a minor impact. Using the above-mentioned worst-case distribution would reduce performance. And I am not talking about 2-3 times lower performance. More like a 30-100 times lower framerate. And that is even if we pretend that per-pixel lighting is free. Just having a large number of colors with worst-case distribution and zero-cost shaders would drop the performance a lot.
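The dependence on color distribution can be made concrete with a small counting model. This is only a sketch under the assumption that the light color has to be changed every time consecutive objects in draw order differ in color; it counts those changes and says nothing about the actual cost of each one. The `count_batches` name is hypothetical.

```python
from itertools import groupby


def count_batches(colors):
    """Number of runs of consecutive equal colours in draw order.

    Each run can be drawn with one light setup; each new run needs a change.
    """
    return sum(1 for _ in groupby(colors))
```

Eight objects drawn as four reds followed by four blues need two setups, while the same eight objects alternating red and blue need eight. Scale that up to a scene full of objects and the worst-case ordering multiplies the number of state changes accordingly.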

So instead I'll try to implement a dual light shader and see if we can get vertex colors to shine through like that. Let's see how that works out first.

But still, I managed to get some interesting results. Here is a metal shader I created:

And seen from above:

So... yeah... filler...

Saturday, March 3, 2012

81 – Dig(gity)

I had this post on my mind for so long that I forgot half of what I was going to say, so here is the abridged version recapping the week:

I started working on caves and managed to implement a very powerful but very complicated solution. Any convex surface without an underside could be used to create caves underneath if it was high enough. Increase the complexity a little and I'm sure I would have wound up with full boolean mesh operations. Early on I realized that this is far too complicated for what I need but I still finished the implementation, tested it, saw that it was great and immediately deleted it.

I think that a simpler design and terrain overall would benefit my game. I scaled back a lot on the complexity until I reached a fair compromise between ease of creation and what can be done with terrain. The time spent creating the complex solution means that Snapshot 9 will be a little bit behind feature wise: no level switching, no slicing and no caves.

The first good reason to have relatively simple terrain is that terrain is often just eye-candy. You take a look at it, go "WOOOOOOOOOW" and then you spend 99.99% of your time staring either at a horizontal section through the landscape or an underground level.

The second good reason is that complicated terrain requires complicated tools. I could create a full on set of terrain editing tools for the GUI, but this is not really the scope for the game.

Instead my focus was to create an accessible terrain that looks good and is very natural, both initially and after you interact with it. In my last video I showed an early digging prototype, and that had very straight edges around the part that was removed. I don't like that because it brings back memories of the cubes. MUST... AVOID... PUBES... JOKE... I want softer surfaces and I'll show what I came up with at the end of this post, but first something has to be done about the way the landscape looks.

While there is plenty of detail in the map, because of the flat shading you can barely tell what is going on. Using Irrlicht's built-in normal calculator I tried to add definition to the landscape. The result did not turn out too great. I obviously needed something more powerful, a solution implemented by me, but by using this first I learned a ton about normals, Irrlicht's implementation of them, how Blender exports them and why using flat shading requires more vertices than smooth shading. Here is the result of the Irrlicht solution:

This solution, while far from perfect, gave the required detail to the landscape. It also highlighted some problems with terrain creation. When I learned midpoint displacement I remember reading about rectangular artifacts that get carried up and create undesired pyramidal structures. I knew about this bug, but for a cube world it was never a problem.

So the next step was to write a powerful terrain generator class to handle all these tasks, using the same algorithm as the above one, but replacing square midpoint-displacement with diamond-square midpoint displacement. And since I needed normal calculations, I made the class provide these too. The class not only works for discrete values, i.e. each point in the height-map, but can provide the correct values for height and normals for any floating point coordinate using interpolation. Currently the class only supports discrete and interpolated height and normal operations, but on a need-by-need basis I'll add support for other useful values for a 3D engine, like tangents, binormals, bitangents and bisexuals.
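To show what the diamond-square variant does, here is a minimal Python sketch of the textbook algorithm. It is not the generator class described above: the `roughness` parameter, the uniform noise, the handling of edge points and the function name are all assumptions made for illustration.

```python
import random


def diamond_square(exponent, roughness=0.5, seed=0):
    """Return a (2**exponent + 1)-square height grid as a list of rows."""
    rng = random.Random(seed)  # the same seed always gives the same terrain
    size = 2 ** exponent + 1
    h = [[0.0] * size for _ in range(size)]
    for y in (0, size - 1):
        for x in (0, size - 1):
            h[y][x] = rng.uniform(-1.0, 1.0)  # random corner heights

    step, amplitude = size - 1, 1.0
    while step > 1:
        half = step // 2
        # Diamond step: centre of each square = mean of its 4 corners + noise.
        for y in range(half, size, step):
            for x in range(half, size, step):
                mean = (h[y - half][x - half] + h[y - half][x + half] +
                        h[y + half][x - half] + h[y + half][x + half]) / 4.0
                h[y][x] = mean + rng.uniform(-amplitude, amplitude)
        # Square step: each edge midpoint = mean of its in-bounds
        # up/down/left/right neighbours + noise.
        for y in range(0, size, half):
            for x in range((y + half) % step, size, step):
                total, count = 0.0, 0
                for dy, dx in ((-half, 0), (half, 0), (0, -half), (0, half)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < size and 0 <= nx < size:
                        total += h[ny][nx]
                        count += 1
                h[y][x] = total / count + rng.uniform(-amplitude, amplitude)
        step, amplitude = half, amplitude * roughness
    return h
```

The square step is what separates this from plain square midpoint displacement: edge midpoints also average in the freshly computed centres on either side, instead of only the two corners along the edge, which is the usual remedy for the rectangular artifacts mentioned earlier.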

The next step was to improve lighting. Correct normals and bad lighting does not a pretty map make. I learned a ton about lighting in general. How did I do that? By trying to learn shaders. This is how you learn shaders: you start off with a "neutral" shader, one that simply converts from logical 3D coordinates to coordinates the GPU can use using the world-view-projection matrix and fills the areas with a single hard-coded color. This is the most basic shader that you really need to fully understand. Then you start expanding upon it, adding ambient color, texture, directional lighting, specular highlights, normal mapping, etc. I am not ready yet to write my shader lessons, but I'll get there. First I need to solve a problem: out there on the Internet people often write shaders that have global variables initialized with some value. Pretty straightforward. Yet when I use those shaders, the initial value is never assigned and is instead zero, resulting in incorrect rendering. I need to figure out first why these values work for other people and not for me.
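The "neutral" shader described above boils down to two tiny functions. The following Python sketch mimics them on the CPU purely for illustration; it is not shader code, and it assumes a row-major matrix multiplied with a column vector, whereas real shader code may use the opposite convention. All names are hypothetical.

```python
def mat_mul_vec(m, v):
    """Multiply a 4x4 row-major matrix by a 4-component column vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(4)) for r in range(4))


def vertex_stage(world_view_proj, position):
    """Vertex shader: model-space (x, y, z) -> clip-space (x, y, z, w)."""
    x, y, z = position
    return mat_mul_vec(world_view_proj, (x, y, z, 1.0))


def pixel_stage():
    """Pixel shader: every covered pixel gets the same hard-coded RGBA colour."""
    return (1.0, 0.0, 0.0, 1.0)
```

Everything else on the list (ambient color, texture, directional lighting, specular highlights) is added by passing more data out of the vertex stage and doing more arithmetic in the pixel stage.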

Using my new knowledge I created a new lighting model that only uses two lights. This might solve the problems with Irrlicht's default PS 1 shaders that only support two lights for some effects. The new model is far from perfect, and I am still iterating upon it, but this was a good result:

I further tweaked terrain generation, digging softness and lighting.

Terrain generation is done. As in the shape of it. I will not improve upon it more. Not all random maps are equally good, but I haven't found a single bad one yet. The difference between them comes down to subjectivity. I like hilly/rocky landscapes, but some might enjoy smoother ones. The random terrain generator creates both.

Lighting for terrain is also done, at least until I switch from fixed pipeline lighting to a terrain shader.

The only thing left is texturing. A new texturing scheme will come creating more realistic and modern looking landscapes, but not now.

The terrain generator has a resolution. It creates an amount of discrete points in concordance with that resolution. Increasing the resolution does not simply create a good looking terrain with twice the size and useful data. It creates a terrain with twice the size but half the detail. Every time I change resolution a new set of parameters for the terrain generator must be manually and experimentally determined. It is similar to a picture. Scaling it up won't give you any more detail. You may use some filtering, but eventually you'll need a higher resolution image. So I am going with a fixed resolution terrain.

So I implemented stretching. The terrain data can now be used to create terrain at any scale. Currently the unit borders are aligned with the heightmap to create the best looking map. I have not yet investigated how this looks if they are not aligned.
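Stretching a fixed-resolution heightmap to any scale comes down to sampling it at fractional coordinates. Here is a minimal Python sketch using bilinear interpolation for heights and central differences for normals; the interpolation scheme, the clamping at the borders and all names are assumptions, not necessarily what the terrain class does.

```python
import math


def sample_height(h, x, y):
    """Bilinearly interpolate grid `h` (list of rows, at least 2x2) at (x, y)."""
    max_x, max_y = len(h[0]) - 1, len(h) - 1
    x = min(max(x, 0.0), float(max_x))  # clamp to the grid
    y = min(max(y, 0.0), float(max_y))
    x0, y0 = min(int(x), max_x - 1), min(int(y), max_y - 1)
    fx, fy = x - x0, y - y0
    top = h[y0][x0] * (1 - fx) + h[y0][x0 + 1] * fx
    bottom = h[y0 + 1][x0] * (1 - fx) + h[y0 + 1][x0 + 1] * fx
    return top * (1 - fy) + bottom * fy


def sample_normal(h, x, y, eps=0.5):
    """Approximate unit normal (nx, ny, nz), with z up, by central differences."""
    dx = (sample_height(h, x + eps, y) - sample_height(h, x - eps, y)) / (2 * eps)
    dy = (sample_height(h, x, y + eps) - sample_height(h, x, y - eps)) / (2 * eps)
    length = math.sqrt(dx * dx + dy * dy + 1.0)
    return (-dx / length, -dy / length, 1.0 / length)


def world_to_grid(world, world_size, grid_points):
    """Convert a world coordinate to a fractional heightmap coordinate."""
    return world / world_size * (grid_points - 1)
```

With this, a world of any size maps onto the same data: `world_to_grid(50.0, 100.0, 257)` lands on grid coordinate 128.0, the middle of a 257-point heightmap. Because of the clamping, slopes right at the border come out flatter than they should.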

The terrain is also seedable, as in there are a few values that when reused always create the same terrain. Useful, this way I can always play around with my favorite maps.

Putting it all together we get this:

There are some coloring bugs that I have intentionally left in for terrain. While examining the physics and terrain model you can easily prove that these values are wrong, but I actually like it this way because it gives the terrain a little bit of personality and outlandishness. The texture stretching bugs are not intentional and will get fixed!

Overall this was a great first week for March's experiment-a-thon. A smashing success even. There is absolutely no way I am going back to cubes! Or a 2D engine!

Now just let's hope that I can hack together a Snapshot 9 out of all this stuff!
